DMVPN animation

January 2, 2014

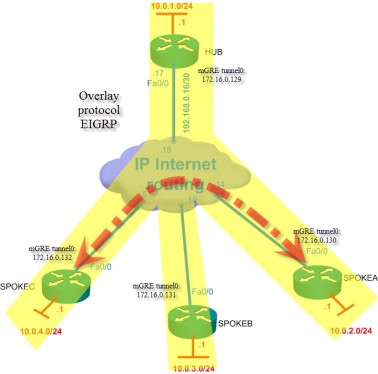

Here is an interactive animation of DMVPN (Dynamic Multipoint VPN), followed by a detailed offline lab: a snapshot of the topology under test with, hopefully, all the commands needed for analysis and study.

Finally, check your understanding of the fundamental concepts by taking a small quiz.

Studied topology:

Animation

http://hpnouri.free.fr/dmvpn/DMVPN.swf

Offline Lab

http://hpnouri.free.fr/dmvpn/offlinelabv1025.swf

You might consider the following key points for troubleshooting:

Routing protocols:

To avoid RPF failures, form routing adjacencies and advertise routes only through the tunnel interfaces.
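One way to keep the routing protocol confined to the tunnel can be sketched as follows. This is only an illustration: the EIGRP AS number 100, the interface name Tunnel0, the overlay subnet 10.0.0.0/24 and the LAN subnet 192.168.1.0/24 are all assumptions, not values taken from the lab.

```
router eigrp 100
 ! Hypothetical overlay (tunnel) subnet
 network 10.0.0.0 0.0.0.255
 ! Hypothetical LAN behind this router
 network 192.168.1.0 0.0.0.255
 ! No network statement covers the physical WAN interface,
 ! and adjacencies are allowed on the tunnel only
 passive-interface default
 no passive-interface Tunnel0
```

The point is that the underlay network (the one used to reach the tunnel endpoints) stays out of the overlay routing protocol.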

EIGRP

- Turn off “next-hop-self” on the HUB to make spokes speak directly. If it stays enabled, the HUB advertises itself as the next hop, so traffic between spokes always passes through the HUB and NHRP resolution does not occur.

- Turn off “split-horizon” on the HUB to allow EIGRP to advertise a route received from one spoke to another spoke through the same interface.

- Turn off summarization.

- Pay attention to the bandwidth required for EIGRP communication: it requires more bandwidth than the tunnel default, so raise it on the tunnel interface (for example “bandwidth 1000”).
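The EIGRP points above could translate into a HUB configuration along these lines. It is a minimal sketch: the AS number 100 and the interface name Tunnel0 are assumptions, and “no auto-summary” is shown as one possible reading of "turn off summarization".

```
interface Tunnel0
 ! Raise the bandwidth above the tunnel default (value in kbit/s)
 bandwidth 1000
 ! Keep the originating spoke as next hop (needed for spoke-to-spoke)
 no ip next-hop-self eigrp 100
 ! Re-advertise spoke routes out of the same tunnel interface
 no ip split-horizon eigrp 100
!
router eigrp 100
 no auto-summary
```

On the spokes, only the “bandwidth” line would normally be needed, since next-hop-self and split-horizon matter on the router that relays routes between spokes.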

OSPF

- “ip ospf network point-to-multipoint” allows only Phase 1 (spoke data-plane communication goes through the HUB).

- “ip ospf network broadcast” on all routers allows Phase 2 (direct spoke-to-spoke data-plane communication).

- Set the OSPF priority on the HUBs higher than on the spokes so that the HUBs become DR/BDR; “ip ospf priority 0” on the spokes keeps them out of the election.

- Make sure OSPF timers match if spokes and the HUB use different OSPF network types (the default hello/dead intervals are 10/40 seconds for broadcast and 30/120 seconds for point-to-multipoint).

- Because spokes are generally low-end devices, they probably can’t cope with the LSA flooding generated within the OSPF domain. Therefore, it’s recommended to make their areas stub (type 5 external LSAs are filtered out) or totally stubby (neither type 5 external LSAs nor type 3 inter-area LSAs are accepted, apart from a default route).
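As an illustration of the OSPF points above, a Phase 2 setup might look like the following fragment. The process ID 1, area 1, the priority value 255 and the interface name Tunnel0 are assumptions chosen for the example; network statements and the rest of the tunnel configuration are omitted.

```
! --- HUB (assumed to be the ABR for area 1) ---
interface Tunnel0
 ip ospf network broadcast
 ! Highest priority so the HUB wins the DR election
 ip ospf priority 255
!
router ospf 1
 ! Totally stubby: no type 5 and no type 3 LSAs sent into area 1
 area 1 stub no-summary
!
! --- SPOKE ---
interface Tunnel0
 ip ospf network broadcast
 ! Priority 0: the spoke never becomes DR or BDR
 ip ospf priority 0
!
router ospf 1
 area 1 stub
```

If the HUB and the spokes were to use different network types, the hello and dead intervals would additionally have to be aligned with “ip ospf hello-interval” and “ip ospf dead-interval” on the tunnel interfaces.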

Make sure the MTU values match between tunnel interfaces and leave room for the tunnel overhead (“ip mtu 1400” / “ip tcp adjust-mss 1360”).
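Applied to a tunnel interface, the two commands look like this (Tunnel0 is an assumed interface name). The MSS value follows from the MTU: 1400 bytes minus 20 bytes of IP header and 20 bytes of TCP header gives 1360.

```
interface Tunnel0
 ! Leave headroom for GRE and IPSec encapsulation
 ip mtu 1400
 ! 1400 - 20 (IP header) - 20 (TCP header) = 1360
 ip tcp adjust-mss 1360
```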

Consider the OSPF scalability limitation (about 50 routers per area). OSPF requires much more tweaking for large-scale deployments.

Layered approach:

DMVPN involves multiple layers of technologies (mGRE, routing, NHRP, IPSec), so troubleshooting an issue can be very tricky.

To avoid cascading errors, test your configuration after each step and move forward only when the current step works. For example, IPSec encryption is not required for DMVPN to function, so make sure your configuration works without it and only then add it (set the IPSec parameters and just add “tunnel protection ipsec profile” to the tunnel interface).
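The last step, adding IPSec once everything else works, could be sketched as below. All names and values here are assumptions for illustration (policy number 10, the pre-shared key EXAMPLE_KEY, the transform set TS, the profile DMVPN-PROF, the interface Tunnel0, and the choice of algorithms); adapt them to your own lab.

```
crypto isakmp policy 10
 encryption aes
 hash sha
 authentication pre-share
 group 14
! Wildcard peer address, because spoke addresses may be dynamic
crypto isakmp key EXAMPLE_KEY address 0.0.0.0 0.0.0.0
!
crypto ipsec transform-set TS esp-aes esp-sha-hmac
 mode transport
!
crypto ipsec profile DMVPN-PROF
 set transform-set TS
!
interface Tunnel0
 ! The only change needed on the already working tunnel
 tunnel protection ipsec profile DMVPN-PROF
```

A possible check order that follows the layers: “show dmvpn” and “show ip nhrp” for the tunnel and NHRP registrations, “show ip eigrp neighbors” or “show ip ospf neighbor” for routing, and finally “show crypto isakmp sa” and “show crypto ipsec sa” once encryption is enabled.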