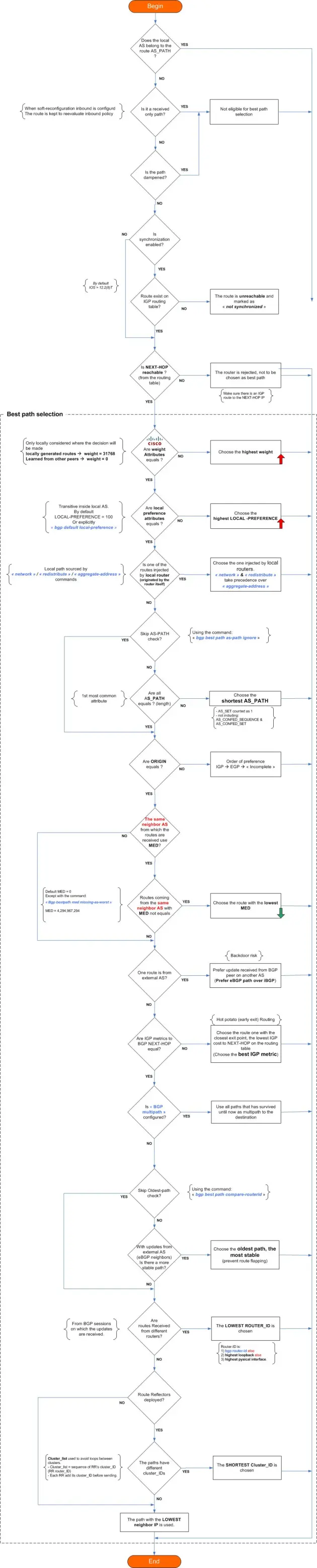

BGP best path selection

November 10, 2018

The complexity and the efficiency of BGP reside in the concept of route “attributes” and the way the protocol juggles them to determine the best path.
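In practice, this "juggling" is an ordered list of tie-breakers: each attribute is compared only when all the previous ones are equal. The Python code below is a minimal sketch of that idea. It assumes a simplified Cisco-style order: weight, LOCAL_PREF, locally originated, AS_PATH length, origin, MED, eBGP over iBGP, IGP metric to the next hop, router ID, and finally neighbor address. It is not Cisco's implementation. The `Path` class and the `better`/`best_path` helpers are hypothetical. The sketch also omits several real checks: next-hop reachability, the oldest-eBGP-path rule, multipath, cluster list length, and knobs such as `bgp always-compare-med`.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address

# Lower rank = more preferred origin (IGP < EGP < INCOMPLETE)
ORIGIN_RANK = {"igp": 0, "egp": 1, "incomplete": 2}

@dataclass
class Path:
    # Hypothetical model of a BGP path; only the fields used below
    name: str                        # label for the example only
    weight: int = 0                  # Cisco-specific, local to the router
    local_pref: int = 100            # default LOCAL_PREF on Cisco IOS
    locally_originated: bool = False
    as_path: tuple = ()              # sequence of AS numbers
    origin: str = "igp"
    med: int = 0
    ebgp: bool = True
    igp_metric: int = 0              # IGP cost to the BGP next hop
    router_id: str = "0.0.0.0"
    neighbor: str = "0.0.0.0"

    def neighbor_as(self):
        # First AS in AS_PATH is the neighboring AS (None if the path is empty)
        return self.as_path[0] if self.as_path else None

def better(a: Path, b: Path) -> Path:
    """Return the preferred of two paths using a simplified Cisco-style order.
    Oldest-eBGP-path, multipath and cluster-list checks are omitted."""
    if a.weight != b.weight:                      # highest weight
        return a if a.weight > b.weight else b
    if a.local_pref != b.local_pref:              # highest LOCAL_PREF
        return a if a.local_pref > b.local_pref else b
    if a.locally_originated != b.locally_originated:
        return a if a.locally_originated else b
    if len(a.as_path) != len(b.as_path):          # shortest AS_PATH
        return a if len(a.as_path) < len(b.as_path) else b
    if ORIGIN_RANK[a.origin] != ORIGIN_RANK[b.origin]:
        return a if ORIGIN_RANK[a.origin] < ORIGIN_RANK[b.origin] else b
    # Lowest MED, compared only between paths from the same neighboring AS
    if a.neighbor_as() == b.neighbor_as() and a.med != b.med:
        return a if a.med < b.med else b
    if a.ebgp != b.ebgp:                          # eBGP over iBGP
        return a if a.ebgp else b
    if a.igp_metric != b.igp_metric:              # closest next hop
        return a if a.igp_metric < b.igp_metric else b
    rid_a, rid_b = IPv4Address(a.router_id), IPv4Address(b.router_id)
    if rid_a != rid_b:                            # lowest router ID
        return a if rid_a < rid_b else b
    # Last resort: lowest neighbor address
    return a if IPv4Address(a.neighbor) <= IPv4Address(b.neighbor) else b

def best_path(paths):
    # Compare paths pairwise, keeping the current winner
    best = paths[0]
    for p in paths[1:]:
        best = better(best, p)
    return best

paths = [
    Path("via R1", as_path=(65001, 65010), router_id="10.0.0.1"),
    Path("via R2", as_path=(65002,), router_id="10.0.0.2"),
    Path("via R3", local_pref=200, as_path=(65003, 65010, 65020),
         ebgp=False, router_id="10.0.0.3"),
]
print(best_path(paths).name)  # via R3
```

Here R3 wins despite having the longest AS_PATH, because LOCAL_PREF is evaluated first. With R3 left at the default LOCAL_PREF, R2 would win on AS_PATH length. The sketch uses a pairwise comparison rather than a single sort key because MED is compared only under a condition, which can make the result depend on the order in which paths are compared. That order dependence is the problem Cisco's `bgp deterministic-med` command addresses.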

This is a quick guide (a refresh of an old article) that is still very relevant for anyone dealing with BGP design.

I hope the following Cisco BGP best path selection diagram will be of help: